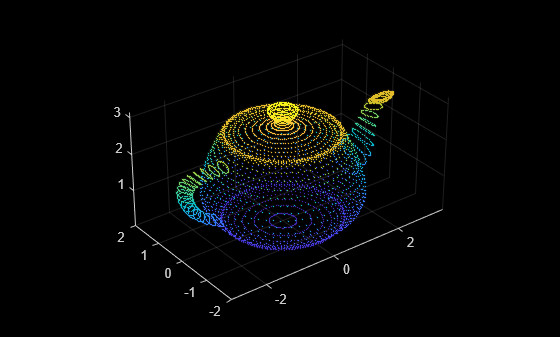

Most computer vision applications today work with ‘flat’ two-dimensional images like the ones you find in this Medium blogpost, to great success. The world is not flat, however, and adding a third dimension promises not only to increase performance but also to make entirely new applications possible. With 3D data becoming more and more widely available, the time to add that third dimension may very well be now. Some smartphones now feature Lidar sensors (an acronym for “light detection and ranging”, sometimes called “laser scanning”), while other devices use RGB-D cameras like Kinect or Intel RealSense (an RGB-D image is the combination of a standard RGB image with its associated “depth map”). 3D data provides a rich spatial representation of the sensor’s surroundings and has applications in robotics, smart home devices, driverless cars, medical imaging and many other industries. In this blogpost, we explore Meta (Facebook)’s 3DETR and its predecessor VoteNet, which present a clever approach to recognizing objects in a 3D point cloud of a scene (see the 3DETR and VoteNet research articles).

These methods go beyond earlier approaches in that they fully exploit the available depth information without a prohibitive increase in compute cost.

The models’ objective is to take point clouds (preprocessed from RGB-D images) and estimate oriented 3D bounding boxes as well as the semantic classes of the objects.

Several formats are available for 3D data: RGB-D images, polygon meshes, voxels and point clouds. A point cloud is simply an unordered set of coordinate triplets (x, y, z). This format has become very popular because it preserves all the original 3D information and does not use any discretization or 2D projection. Fundamentally, 2D-based methods cannot provide accurate 3D position information, which is problematic for many critical applications like robotics and autonomous driving. Hence, applying machine learning techniques directly to point cloud inputs is very appealing: it avoids the loss of geometric information that occurs when 2D projections or voxelizations are performed. Thanks to the rich feature representations inherent in 3D data, deep learning on point clouds has attracted a lot of interest over the past few years. However, the input’s high dimensionality and unstructured nature, as well as the small size of available datasets and their levels of noise, pose difficulties. Moreover, point clouds are by nature occluded and sparse: some parts of 3D objects are simply hidden from the sensor, or the signal can be missed or blocked.
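To make “point clouds preprocessed from RGB-D images” more concrete, here is a minimal sketch of how a depth map can be turned into an unordered set of (x, y, z) triplets. It assumes a simple pinhole camera whose intrinsics `fx`, `fy`, `cx`, `cy` are known, and it treats a depth of 0 as a missing measurement. The function name `depth_to_point_cloud` and these parameters are illustrative assumptions. This is a generic back-projection, not the exact preprocessing used in the 3DETR or VoteNet code.

```python
import numpy as np


def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth map (in meters) into an (N, 3) array of (x, y, z) points.

    Hypothetical helper: assumes a pinhole camera with focal lengths fx, fy and
    principal point (cx, cy); pixels with depth 0 are treated as missing.
    """
    h, w = depth.shape
    # Pixel grid: u runs along columns (image x), v along rows (image y).
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    z = depth.astype(np.float64)
    valid = z > 0  # drop holes where the sensor returned no depth
    x = (u[valid] - cx) * z[valid] / fx
    y = (v[valid] - cy) * z[valid] / fy
    return np.stack([x, y, z[valid]], axis=1)


if __name__ == "__main__":
    depth = np.array([[1.0, 0.0],
                      [2.0, 2.0]])  # toy 2x2 depth map with one missing pixel
    points = depth_to_point_cloud(depth, fx=1.0, fy=1.0, cx=0.0, cy=0.0)
    print(points.shape)  # (3, 3): three valid pixels, each an (x, y, z) triplet
    # The rows have no inherent order: shuffling them describes the same scene,
    # and an order-independent summary such as the per-axis max is unchanged.
    shuffled = np.random.default_rng(0).permutation(points)
    print(np.allclose(points.max(axis=0), shuffled.max(axis=0)))  # True
```

The missing pixel simply disappears from the output, which illustrates why point clouds end up sparse. Because the rows can be listed in any order, a model consuming them has to be insensitive to that order.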

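The models estimate *oriented* 3D bounding boxes. The sketch below shows one common way such a box can be described and converted to its 8 corners: a center, a size (length, width, height) and a heading angle. The assumptions here are that boxes rotate only about the vertical z axis and that the angle is given in radians. Axis order, which axis points up and the sign of the angle all vary between datasets and codebases. The helper `box_corners` is hypothetical and is not taken from the 3DETR or VoteNet implementations.

```python
import numpy as np


def box_corners(center, size, heading):
    """Return the 8 corners, shape (8, 3), of an oriented 3D box.

    Hypothetical parameterization: center (cx, cy, cz), size (length, width,
    height) and a heading angle in radians about the vertical z axis.
    """
    l, w, h = size
    # Corners of an axis-aligned box centered at the origin.
    signs = np.array([[sx, sy, sz]
                      for sx in (-1, 1) for sy in (-1, 1) for sz in (-1, 1)])
    corners = signs * np.array([l, w, h]) / 2.0
    # Rotate about z by the heading angle, then translate to the box center.
    c, s = np.cos(heading), np.sin(heading)
    rot_z = np.array([[c, -s, 0.0],
                      [s, c, 0.0],
                      [0.0, 0.0, 1.0]])
    return corners @ rot_z.T + np.asarray(center, dtype=np.float64)


if __name__ == "__main__":
    # A 2 x 1 x 1 box turned by 90 degrees: its long side now runs along y.
    corners = box_corners((0.0, 0.0, 0.0), (2.0, 1.0, 1.0), np.pi / 2)
    print(np.round(corners.max(axis=0), 6))  # [0.5 1.  0.5]
```

Combined with a semantic class label, a handful of numbers like these is enough to describe each detected object. The heading angle is what lets a box follow an object’s orientation instead of staying aligned with the sensor’s axes.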